Indexes: A Silent Frenemy

Knowledge of how your database indexes work can help you build more efficiently. Through indexes, one can expect improved performance and reduced work for queries. However, tables without indexes are common, and for many, that's OK. Indexes can be hard to get right, but they are hugely valuable for scale.

By reading this blog post, you should walk away with the ability to confidently use indexes. We'll start by recapping a few foundational concepts using Postgres as an example and then we'll dive deeper into how indexes work in Materialize.

Indexes are a common way to enhance database performance. An index allows the database server to find and retrieve specific rows much faster than it could do without an index. But indexes also add overhead to the database system as a whole, so they should be used sensibly

The tradeoff is clear: performance on one hand and overhead on the other. Database administrators (DBAs) have topic mastery. Many teams without DBAs distribute the expertise among their members, with only a few willing to build and maintain indexes.

The Optimizer

What is the optimizer and why should I know about it?

Whenever a SQL query arrives at Postgres, the optimizer, also known as the planner, will create a plan to find the fastest path to return the results. To support its decisions about which plan is better, it will assign a cost to each using multiple factors. The strategy's name is cost-based, where a lower cost means a more optimal plan. Cost is related to disk page fetches. The factors range from disk block usage to statistics about columns and values.

Running queries and the ANALYZE command helps the optimizer better understand the database content and calculate the correct costs.

When the optimizer does the job wrong, it becomes a frenemy, like Gimli and Legolas from Lord of the Rings and its worst enemy is how to optimize joins, not us! It's essential to remember that it always tries to help. Anyway, if it returns an undesired plan it's possible to refine how it calculates the costs.

Debugging how it works can be a fun or stressful task. Reverse engineering over simple queries is fine. The struggle appears at the same pace as the query's complexity. Understanding how the optimizer works at a high level generates awareness about the chances of having useless indexes.

Experimenting

Begin by creating a table in Postgres containing a handful of random contact names and phones with two indexes, one for the name and one for the phone number. It's a simple use case where an application retrieves a particular contact by name or phone.

1 | |

2 | |

3 | |

4 | |

5 | |

6 | |

7 | |

8 | |

9 | |

10 | |

11 | |

12 | |

13 | |

14 | |

15 | |

16 | |

17 | |

One of the two indexes is useless. Can you guess which?

The best way to answer the question is to run the queries over the table using the command EXPLAIN to understand which plan the optimizer chooses. Avoid using \timing to measure if an index is in use. It is helpful to understand a query's overall performance, including the latency to the database, but not to tell if the query is using an index.

Explaining a query's plan to retrieve a contact by its name in Postgres looks as follows:

1 | |

2 | |

3 | |

4 | |

5 | |

6 | |

The line starting with Seq Scan on contacts indicates that the optimizer opts to scan the table (Seq Scan) rather than using the index, and the cost also appears as the same as the estimation for the rows and width (average row size in bytes). For someone learning indexes, it can sound counterintuitive, but remember that the optimizer always tries to find the fastest path to return the results.

Reading the disk for multiple possible adjacent rows with the name "Kelly" is faster than going through the index, checking the name, and then immediately reading the disk. This suggests that the index contacts_name_idx is useless and increases overhead without any gain for this particular query, where all the values for a column are the same. The overhead presents silently and in different ways, like increased writing times, maintenance, or storage.

Four different scans are available in Postgres. It's commonly thought that an index will always be faster, but that isn't always the case.

- Seq Scan: Scans the whole table (A.K.A full table scan).

- Index Scan: Scans the index and for each match goes immediately to the table.

- Index Only Scan: Scans only the index, relying on the VACUUM and the visibility map.

- Bitmap Index Scan: Scans all it needs from the index and then goes to the table.

Now let's take a look at how Postgres explains a query filtering by a contact's phone:

1 | |

2 | |

3 | |

4 | |

5 | |

6 | |

The optimizer refers to the index contacts_phone_idx in its fastest plan path. The distribution of data values is essential for its usage. Having a column with equal values will not affect the optimizer's decision. This is clear when inserting millions of contacts with the same name and prefix but different phone numbers.

1 | |

2 | |

3 | |

4 | |

5 | |

For personal experimentation, reuse a sample of data from a production table and run VACUUM and ANALYZE after any import. It will reflect the production use cases and a similar distribution of data values.

A silent, growing enemy

Mordor grew in silence. How was it possible? Mountains on three sides surrounded it.

Indexes are also behind other objects, like tables or views. Developers and business analysts interact only with these objects, leaving index usage implicit. If no one checks the indexes, they can grow big or inefficient, as shown by the experiment. Usage around schema changes all the time: a new feature or requirement appears, the query switches columns or filters, resulting in a different plan.

Even with new mechanisms and tools to control the ecosystem status, keeping an eye on the indexes is a healthy practice.

Transitioning to the streaming world

So far, this blog post has talked about Postgres indexes and other related topics, but this is wildly different from how indexes work in Materialize.

The implementation of Postgres dates back to 1986 with its initial release in 1996! At the time disks and their cost significantly influenced how technology was designed. And so, Postgres and its index by default, B-Trees, were thought about with disk usage in mind, not with real-time memory-intensive tasks in mind.

With data reading and processing happening on the fly, today's streaming world uses memory as the primary storage layer, which has caused us to rethink how indexes work. At Materialize we use a new structure called arrangements.

What are arrangements?

Arrangements are the internal structure in Materialize, just as B-Trees are in Postgres. The fundamental difference is that arrangements are an in-memory data structure and have been designed with a focus on data streaming. If you're familiar with LSM-Trees, it's a particular implementation where each record ends up in memory, with an efficient 1

1

read/write ratio.

Materialize has a rule-based optimizer that follows a particular set of rules to decide which is the fastest path to return the results rather than calculating different costs. If the optimizer detects that an index (i.e. an arrangement) will speed up the results, it will use it. Additionally, one of the most standout features of Materialize's optimizer is its strong query decorrelation support to optimize subqueries.

Sharing is caring

Memory is a powerful but scarce resource, like gold is for Smaug. Materialize reuses indexes (arrangements) in its internals to achieve better performance while reducing redundancy and overhead — a common approach in relational databases but not so much in streaming frameworks. Another notable feature of Materialize's internal structure is arrangements. For cases using joins it is a significant advantage, as shown in the next section.

Going back to our contact's table example, the Materialize optimizer will use the indexes and speed up both queries, but will the indexes get shared?

It will depend on the downstream usage. Defaulting to a materialized view by selecting only one of the two indexed columns in the last experiment would reuse the index and avoid redundancy; selecting all the columns would create a new index since there is no existing index that suits the needs.

Experimenting in Materialize

After creating the same experimental schema as Postgres but in Materialize, let's create a materialized view using the contacts table:

1 | |

2 | |

3 | |

4 | |

5 | |

6 | |

7 | |

The materialized view will need the same index as the one in the contacts table, so Materialize will reuse the index! You can see that Materialize is sharing and reusing the index by taking a peek into the system catalog:

1 | |

2 | |

3 | |

4 | |

5 | |

6 | |

7 | |

8 | |

9 | |

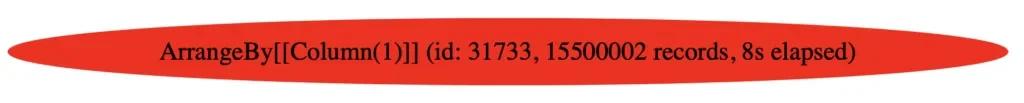

count denotes the number of times Materialize reuses the arrangement, in this case for the operator that handles the records in memory (ArrangeBy[[Column(1)]]). It is currently reusing it three times, one for the table and two for the materialized view. Reusing the table's index saves more than 1.3GB of memory in this case!

Joins benefit hugely from shared arrangements. Always consider them to help improve overall efficiency.

Forecasting

Checking Materialize's memory consumption is tricky. Most of the information concerns the number of records. The memory usage tool provides information about the plan and records kept in every index depicted as a red node. While trying to forecast how big the memory consumption will be for a table, view, or index, use the average size of the record and multiply by the number of records that will reside in memory.

Materialize indicating the records in memory for an operator's arrangement

The same information is available in Materialize's catalog mz_arrangement_sizes.

If you're interested in diving even deeper into how indexes work in Materialize, try Materialize locally using our docs, join us in the Community or set up a demo with our team.