We’ve built Materialize as a stream processor wrapped in a database because we think that this is the best abstraction to serve the broad majority of operational data use cases not served well by traditional databases (wrong computation paradigm) and not served well by stream processors alone (too complicated.)

The database abstraction means Materialize doesn’t deliver raw stream processing. Instead, it presents as a Postgres wire-compatible database with incrementally updated materialized views, a feature that people have been asking for in databases for 30 years. As a bonus the streaming engine also gives us streaming inputs and outputs, and even subscriptions to queries.

This is a very intentional design decision, because so many of the engineering teams we’ve talked to tell us they are spending too much time and money on complicated, undifferentiated work of building, configuring, and maintaining a set of services in and around stream processors that, when you take a step back and squint, looks a lot like a database.

While many of our customers are happy to never think about stream processing and just get fast incremental view maintenance, there are others who are already using stream processors like Flink and Spark Streaming and many of them reasonably ask:

“When would I be better off using Materialize, over a Stream Processor. Why?”

What follows is an answer in two parts: (1) Technical Requirements, and (2) Operational Considerations.

important

If you have a use-case in mind, you can always judge for yourself if Materialize is a better fit for your work by trying Materialize out free here.

Before we start: It’s not a direct comparison

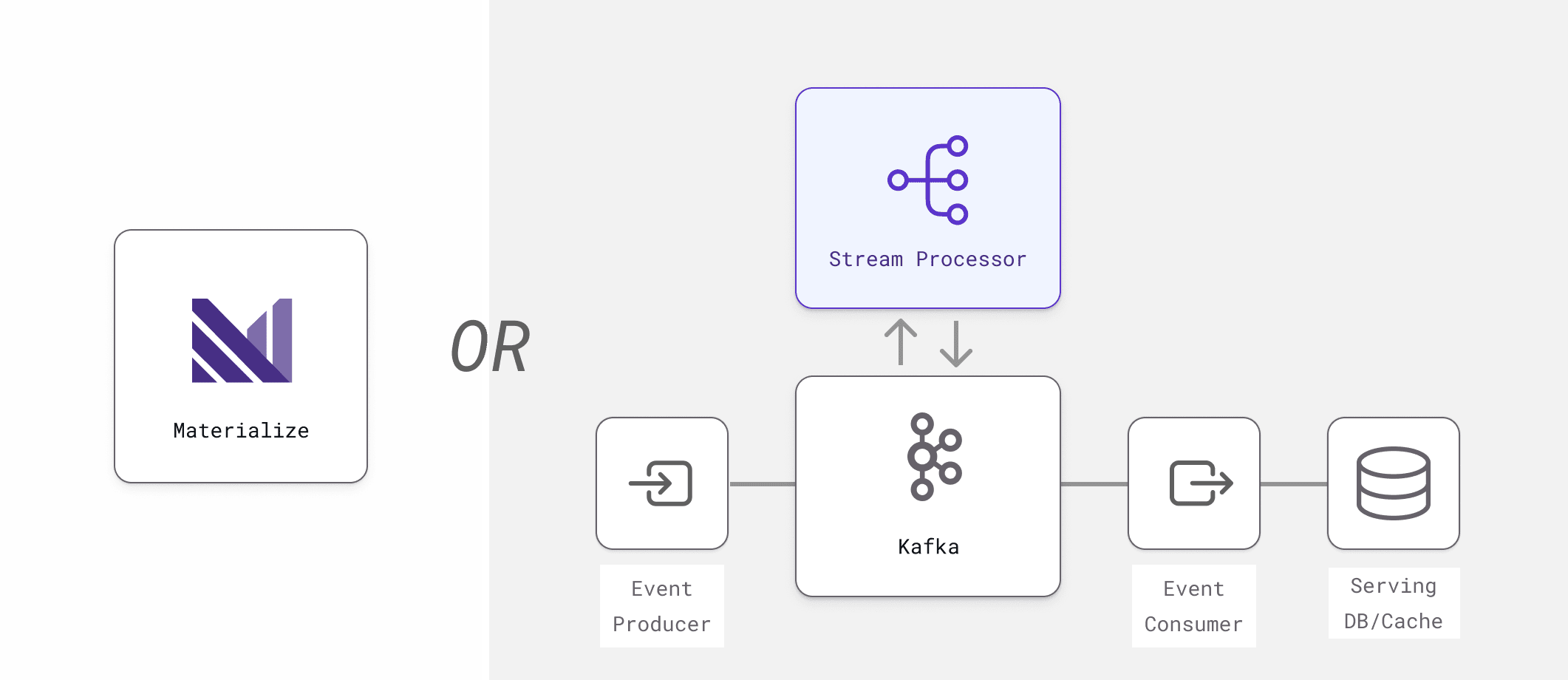

Materialize is one level above stream processors in the software abstraction stack. The stream processor at the heart of Materialize, Timely Dataflow, is mature and stable open source software originally developed at Microsoft Research starting more than 10 years ago. All of the development work at Materialize since its founding in 2019 has been focused on building the database abstractions and distributed architecture around the core stream processing library.

With this in mind, compare Materialize to the larger capability that a stream processor is part of creating, and the larger architecture around a Stream Processor like this:

A stream processor might handle the core transformation, but it relies on Kafka for intermediate storage, connectors to get data in and out, and a DB or Redis for a serving layer. Stream Processors involve orchestration of multiple services, whereas Materialize abstracts that away presenting to the user as a streaming-first data warehouse monolith.

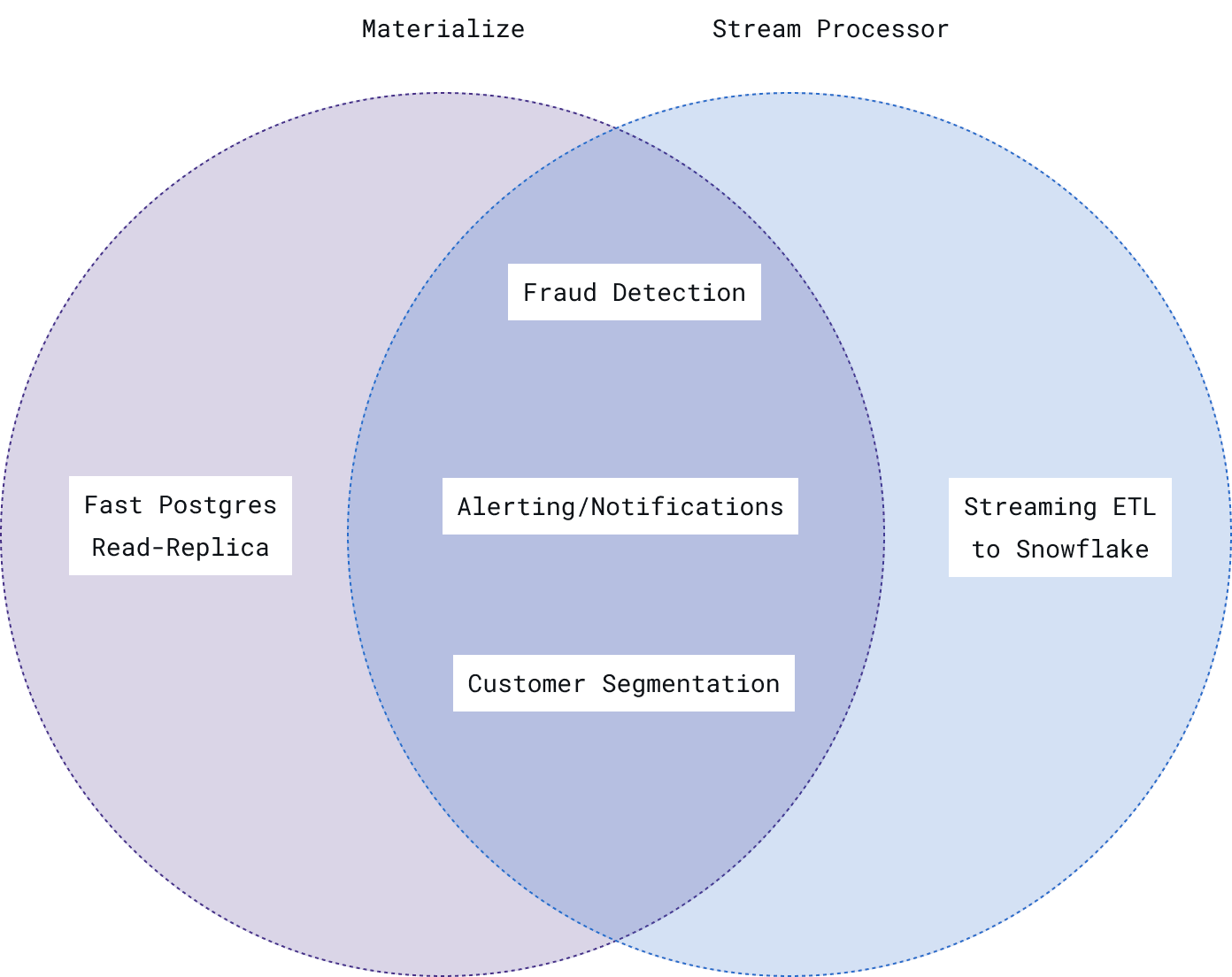

Because the feature set of Materialize and Stream Processors are so materially different, there are Materialize use-cases that nobody would ever use a Stream Processor for, and stream processor use cases that nobody would ever use Materialize for:

Technical Requirements

While there are no absolute indicators here, what follows are three key questions, the answers to which provide reliable signal that a project fits better in Materialize or a Stream Processor.

Is the data originating from a database?

Yes? -> Materialize

The most obvious reason for this is JOINS. If your data is coming from a database, it is likely very relational in structure, and you are likely going to need JOINs to produce the transformations you need. Materialize is purpose-built to handle streaming joins with the exact same SQL semantics and flexibility of traditional OLAP databases. (Materialize can even use shared indexes to enable multiple views joining on the same base data with no incremental resource cost.) Stream processors tend to have a much more limited and manual approach to facilitating JOINs.

The other factor is transactional consistency. Whether connected directly to your PostgreSQL DB, or via CDC stream to other databases, Materialize maintains strong consistency and transactional guarantees that allow you to treat it almost like a read-replica of your database.

For example: If a single transaction in the upstream database creates 50 change records, Materialize won’t show results until all 50 changes have been processed. With other stream processors, this level of consistency is sometimes possible, but it’s not the default, it needs to be manually configured which takes work.

Could the workload fit in a database?

No? -> Stream Processor

Imagine you have a PostgreSQL database with performant incremental view maintenance. There are no limits on SQL syntax or complexity, you can do complex joins, CTE’s, you can create views on views, etc… Could you run your workload there?

When judging whether your use case fits in a database, think about SQL and scale:

Does your model fit in SQL?

Can you express the transformations you need in SQL? Is it a good fit? While SQL (the dialect that Materialize supports, with recursive SQL) is Turing complete, that doesn’t make it a wise choice in all scenarios.

Can you put finite bounds on the data you need to work with?

There are no magic beans in databases. If the source data needed to compute your transformations wouldn’t fit in a (very large) PostgreSQL Database then you may hit scaling issues in Materialize. Fortunately, there are a few ways to handle massive throughput of data while still working within finite bounds:

- Unbounded Change on Bounded Datasets - The stock market is a great example: A table of stock price by ticker is changing continually, but the number of rows (publicly traded companies) is relatively finite. Every database grows in size over time, so it’s not to say that a growing dataset is untenable for Materialize, but update-heavy workloads in Materialize are most efficient.

- Bounded Time Windows - Say you do have a stream of data that, given enough time, wouldn’t fit in a Postgres Database. Impression data for a massive ad network, for example. One easy and performant way to “make it finite” is to set a rolling window of events you are interested in working with… I.E. “Only materialize the last 90 days of data” is an easy and efficient way to make computations performant in Materialize.

Are you creating or transforming state?

Creating State? -> Stream Processor. Transforming State? -> Materialize.

When you want to express that data should become immutable after some moment but not discarded, you want a stream processor. That is not view maintenance (views are mutable and always update when the underlying data changes!).

- Example of Creating State = One-in, one-out event enrichment. When you use a stream processor to take a feed of transactions, use the IP to enrich with lat/lon coordinates, and push the event back out.

- Example of Transforming State = Use Materialize to pull in a source table of transactions and a source table of lat/lon coordinates by IP. Join the two in an incrementally maintained materialized view.

You can imagine the different implications of each approach. The first is immutable by design, if the IP/Geo lookup is improved, the data will only be reflected in transactions going forward. The second is mutable by design, if IP/Geo lookup is improved, historic transactions will be affected.

Keep in mind, if you’re coming from a background in stream processing, you may be used to looking at things through the lens of “creating state” but the same problem can often be solved both ways.

Do you need a serving layer?

Yes -> Materialize.

If the work done in a stream processor needs to be written out to a cache or a database to properly solve the use case, you may be able to consolidate two services into one by using Materialize. Besides the obvious benefits of simplicity, handling the serving layer in Materialize can have a few non-obvious benefits:

- Lower end-to-end latency - Especially if you are syncing from a stream processor to a database.

- Stronger consistency guarantees - It’s easy to assume you get the consistency guarantees of whatever database or cache you write to from a streaming job, but you also have to carefully consider how your stream processor writes updates.

- Straightforward restarts and recoveries - When all state is managed in a single system, the process to restart or recover from failure requires fewer coordination steps.

There is a failure isolation benefit of using a separate serving layer (if the stream processor goes down, the serving layer can still serve stale results) but a similar level of isolation can be designed within Materialize using separate clusters and materialized views.

Operational Considerations

In a steady state (no change to upstream or downstream systems, data structure, no change to transformation logic) both Materialize and Stream Processors are stable and predictable with standard monitoring and operating procedures.

Operational considerations can be framed around changes (planned and unplanned) to the surrounding systems and the transformation logic itself.

Who/what is the use case for?

Some amount of the success of cloud data warehouses and the ELT paradigm (Extract data, Load all raw data into storage layer, Transform it in SQL) can be attributed to the fact that they empowered analysts, data scientists and operations teams to own the entire pipeline logic in a language familiar to them: SQL.

Materialize does the same for streaming workloads. So if, based on the use case, you expect to see frequent evolution of the transformation logic requested from teams unfamiliar with writing and debugging stream processing jobs, Materialize might be a better choice because it empowers a broader base of contributors (anyone who knows SQL) to self-serve.

If, on the other hand, you have a single use case where frequent change is not expected (e.g. invoice reconciliation), the fact that transformations are defined in SQL may not bring any tangible benefits.

Is there existing SQL?

If you have a lot of business logic already defined in SQL and dbt because of existing batch systems, the majority of it can be ported directly to Materialize. Anecdotally, customers have reported that the level of change required to move SQL from a cloud data warehouse to Materialize is similar to moving between Redshift and Snowflake.

Even when stream processors have SQL API’s, they are not expecting anyone to copy/paste SQL from a database and have it work. The SQL API is meant as a simplified control interface, and users still need to rewrite their logic to account for the specifics of the underlying streaming system.

Conclusion

Ultimately, we’ve built Materialize as a stream processor wrapped in a database because we think that this is the best abstraction to serve the majority of current and emerging use cases that are not served well by databases (wrong paradigm) and not served well by stream processors alone (too complicated.) But, there will always be a set of use cases and situations that are better served by using a stream processor directly.

At the end of the day, the best way to evaluate tool/use-case fit is to get hands on with each tool and set up a proof of concept or prototype. If you’d like to test out Materialize, you can do so free by registering here!