Re:Inventing Real-Time Data Integration

Over the last week at AWS re:Invent, I had the chance to speak with dozens of engineering and data leaders about their real-time data integration and transformation challenges. These conversations echoed many of the major themes spotlighted in the AWS keynotes. Here are my top four takeaways from the event:

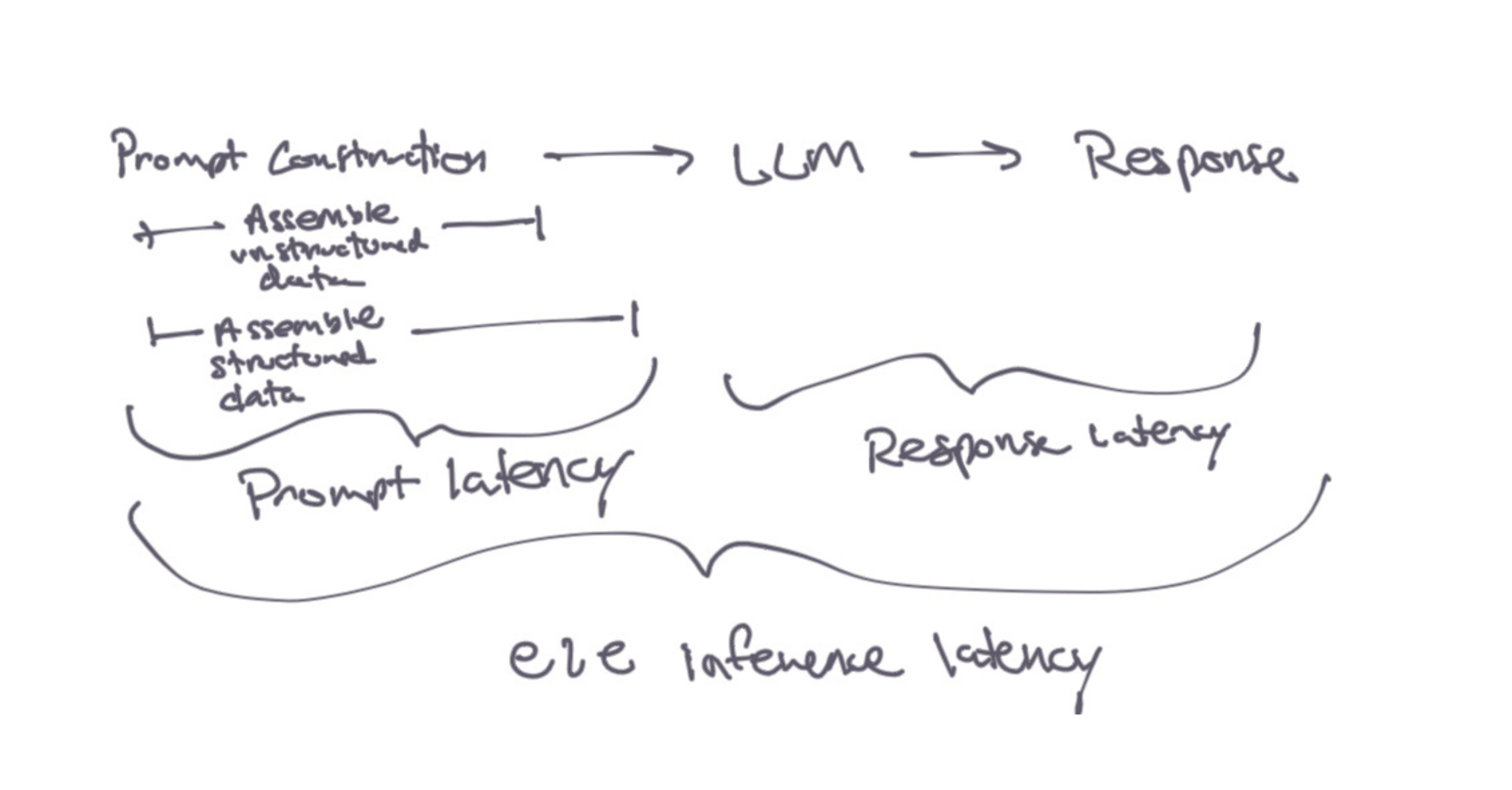

1. Unlocking LLM Potential with Real-Time Structured Data

The real power of LLMs is unleashed at the moment of inference – that moment when models produce outputs that drive real-world decisions. But even the most advanced models won’t deliver impactful outcomes unless they are given prompts that are enriched with an organization’s unique data. Techniques like vector queries have streamlined access to unstructured enterprise knowledge, but integrating real-time structured data into retrieval-augmented generation (RAG) pipelines is the next frontier.

As these inference pipelines evolve, a critical challenge is minimizing end-to-end inference latency. This latency includes not just model response time but also the time it takes to consolidate disparate data sources into a prompt. Keeping that total low is a requirement for putting LLMs in the hot path of online or operational systems.
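To make the prompt-assembly part of that latency concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: `fetch_source` is a hypothetical stand-in for a query against one structured data source, the source names and delays are made up, and no real model or database is called. It only shows the idea that fetching sources concurrently keeps assembly time close to the slowest source rather than the sum of all of them.

```python
import asyncio
import time


async def fetch_source(name: str, delay_s: float) -> str:
    """Hypothetical stand-in for a lookup against one data source."""
    await asyncio.sleep(delay_s)  # simulates network and query time
    return f"{name}: <rows>"


async def build_prompt(question: str, sources: dict[str, float]) -> tuple[str, float]:
    """Fetch all sources concurrently; return (prompt, seconds spent assembling)."""
    start = time.perf_counter()
    parts = await asyncio.gather(
        *(fetch_source(name, delay) for name, delay in sources.items())
    )
    elapsed = time.perf_counter() - start
    prompt = "\n".join(["Context:", *parts, f"Question: {question}"])
    return prompt, elapsed


def run_demo() -> tuple[str, float]:
    # Made-up sources and delays (in seconds), purely illustrative.
    sources = {"orders": 0.05, "inventory": 0.10, "customer_profile": 0.02}
    return asyncio.run(build_prompt("Can this order ship today?", sources))


if __name__ == "__main__":
    prompt, assembly_s = run_demo()
    print(prompt)
    print(f"prompt assembly took {assembly_s:.3f}s before any model call")
```

In a real pipeline the measured assembly time would be added to the model's response time to get the end-to-end figure, which is why stale or slow structured lookups show up directly in user-facing latency.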

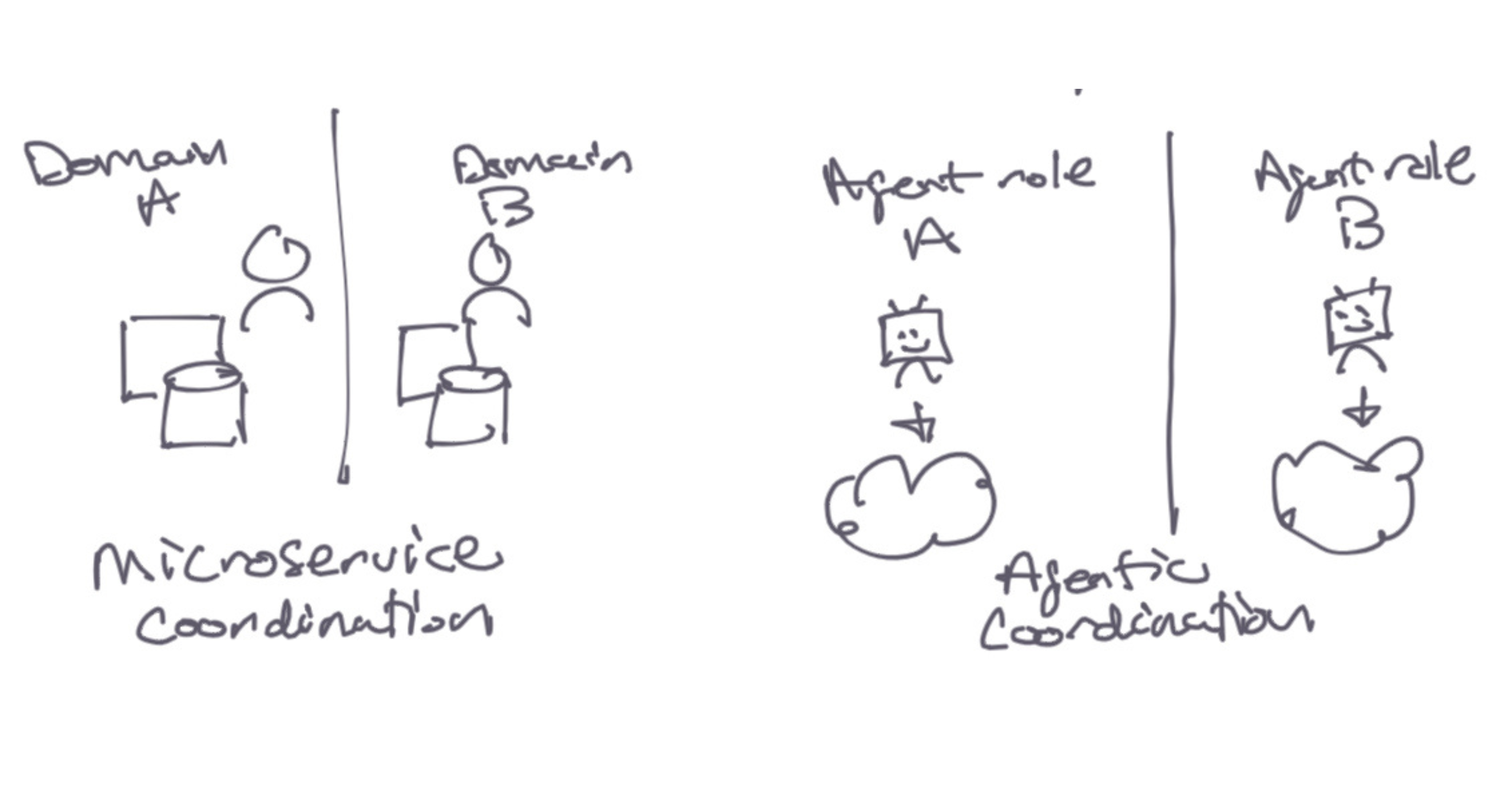

2. Managing Complexity and Uncertainty with Microservices

A recurring theme from AWS and their customer stories was the role of microservices—and the organizational structures they support—in managing complexity. By enabling autonomous, loosely coupled teams, microservices provide a blueprint for scalability and resilience. However, challenges arise when teams need to share state across services.

Traditional methods for sharing state risk introducing dependencies that bottleneck progress. Teams need mechanisms to produce and consume live data products with consistent APIs, allowing stable state sharing without exposing implementation details or forcing interdependent iterations. Striking this balance is essential for scalable, high-performing teams.

3. Agentic Workflows: Coordinating AI Agents at Scale

The rise of agentic architectures, where autonomous LLM-powered agents accomplish tasks on behalf of users, was another highlight. Agents and microservices share a crucial requirement: decentralized yet consistent data sources for effective coordination. Agents thrive by navigating dynamic environments, achieving goals while respecting evolving guardrails. While traditional data meshes offer a unified view of enterprise data, their reliance on lengthy ETL pipelines often makes data too stale for real-time needs. To support agentic and microservice workflows, an operational data mesh is needed—one that delivers enterprise data that is always correct, fresh, and available with low latency.

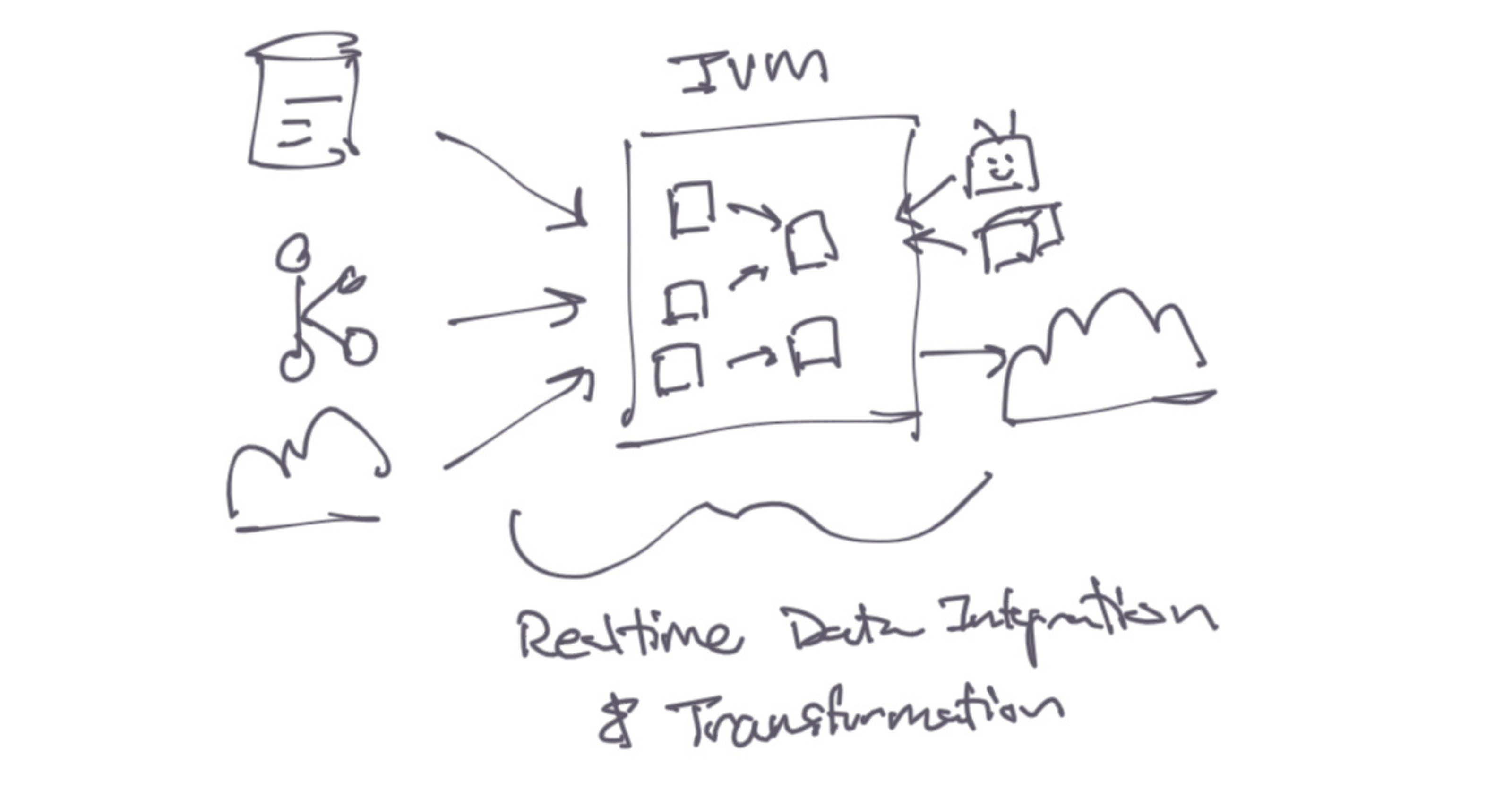

4. Architectural Simplification Through Core Primitives

AWS and their customers shared hard-earned lessons on complexity: it’s never eliminated, only shifted. The key is to hide complexity behind simple, reliable building blocks. With the right primitives, teams can build and evolve systems seamlessly. Without them, complexity leaks out, stalling progress.

A recurring pain point for many at re:Invent was the effort required to make trustworthy, transformed, and fresh data available across systems. Issues ranged from buggy application logic handling data transformation, to standoffs between DBAs and developers, to sprawling pipelines smearing complexity across architectures. A clear missing piece is incremental view maintenance, which makes fresh, accurate data readily accessible for modern applications.
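For readers who have not met incremental view maintenance, the toy Python sketch below illustrates the general idea: a derived result (here, a sum per customer) is kept current by applying each change as it arrives instead of recomputing from scratch. The class and function names are hypothetical, and this is not a description of how Materialize or any particular engine implements it.

```python
from collections import defaultdict


class RevenueByCustomer:
    """Toy view: SUM(amount) GROUP BY customer, kept current by deltas."""

    def __init__(self) -> None:
        self.totals: dict[str, int] = defaultdict(int)
        self.counts: dict[str, int] = defaultdict(int)

    def apply(self, customer: str, amount: int, diff: int) -> None:
        """Apply one change: diff=+1 for an inserted row, -1 for a deleted row."""
        self.totals[customer] += diff * amount
        self.counts[customer] += diff
        if self.counts[customer] == 0:
            # No rows remain for this key, so it drops out of the view.
            del self.totals[customer]
            del self.counts[customer]

    def snapshot(self) -> dict[str, int]:
        return dict(self.totals)


def recompute(rows: list[tuple[str, int]]) -> dict[str, int]:
    """Full recomputation, used here only to check the incremental result."""
    out: dict[str, int] = defaultdict(int)
    for customer, amount in rows:
        out[customer] += amount
    return dict(out)


view = RevenueByCustomer()
view.apply("acme", 100, +1)
view.apply("acme", 50, +1)
view.apply("globex", 70, +1)
view.apply("acme", 100, -1)  # the first acme order is deleted

# The incrementally maintained view matches a full recompute of the remaining rows.
assert view.snapshot() == recompute([("acme", 50), ("globex", 70)])
```

Each call to `apply` does a constant amount of work regardless of table size, which is the property that lets a view stay fresh as the underlying data changes. An update can be modeled as a delete of the old row followed by an insert of the new one.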

Looking Ahead

AWS re:Invent showcased a cohesive AI vision from Amazon. Real-time data integration is set to be a defining topic this year, accelerating innovations from context-rich RAG pipelines to more capable AI agents. At Materialize, we’re excited to contribute with a real-time data integration platform that uses SQL to transform, deliver, and act on fast-changing data. We run anywhere your infrastructure does. If you’d like to learn more, check us out here!