OLTP Workloads: Offload Complex Queries From Your Operational Database

The OLTP (online transaction processing) database is so ubiquitous, we hardly notice it. Every day, millions of consumers swipe credit cards, withdraw bank funds, order inventory, and perform other essential transactions. These actions seem unremarkable to us. But without them, the global economy wouldn’t function.

In recent years, much commentary has been devoted to data fabrics, LLMs, and other facets of the modern data stack. A victim of its own success, OLTP has generated comparatively little discussion. However, data teams can gain more by optimizing OLTP than by dabbling in the latest data trends.

One of those key optimizations is OLTP offloading. OLTP offload — also referred to as query offload — is when expensive queries are moved off of an OLTP database and performed on other data systems. This improves performance and stability, cuts costs, and preserves systems of record.

Many teams turn to read replicas and other bandaids to execute OLTP offload. But these stopgaps are not durable in the long-term. To perform effective OLTP offload, teams need an operational data store.

Read the following blog to learn about OLTP vs. OLAP, problems with complex OLTP workloads, and the case for OLTP offload.

The following is the first part of our blog series on OLTP offload. For the full text, download our free white paper now.

OLTP Database: Definition, Architecture, Use Cases

Online transaction processing (OLTP) is a type of data processing that enables the rapid execution of database transactions. A database transaction is a change, insertion, deletion, or query of data in a database.

OLTP databases must always be available, correct, and low latency to keep a business operational. “Online” means that these database transactions occur very quickly — often in milliseconds. This is why OLTP is ideal for transactions such as purchases, reservations, and deposits.

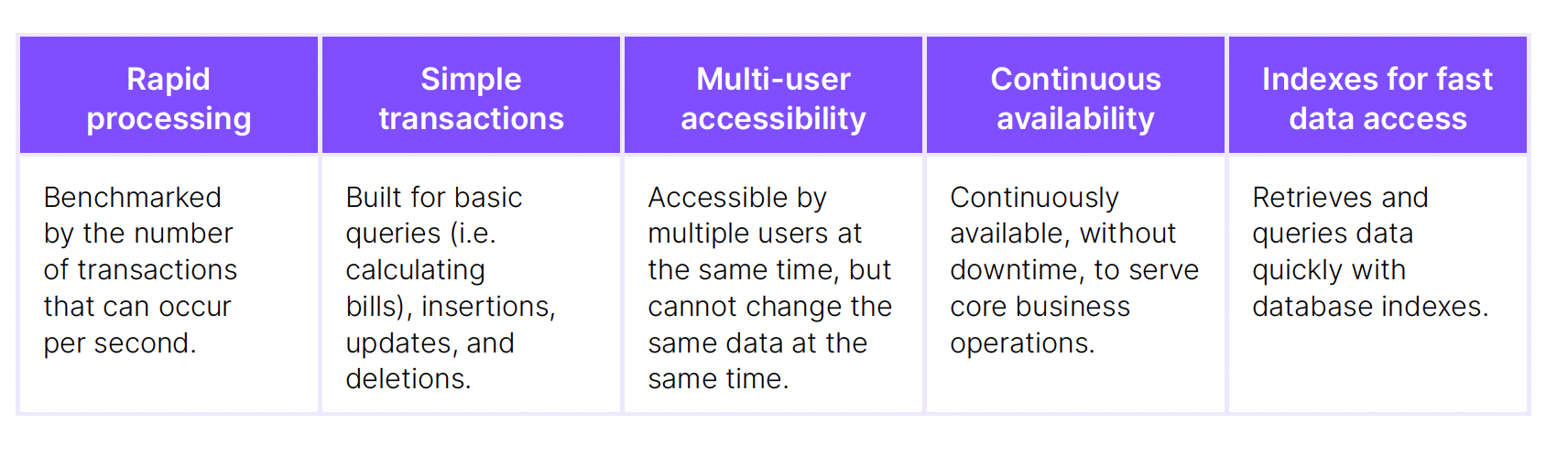

Developers build services that write to and read from OLTP databases. These services power the core operational tasks of a company. OLTP systems are typically used by frontline workers, such as cashiers, bank employees, and restaurant servers. OLTP databases are also employed for self-service applications, including online banking, e-commerce, and concert ticketing services. View the table below to learn about other key features of OLTP.

OLTP: Key Features

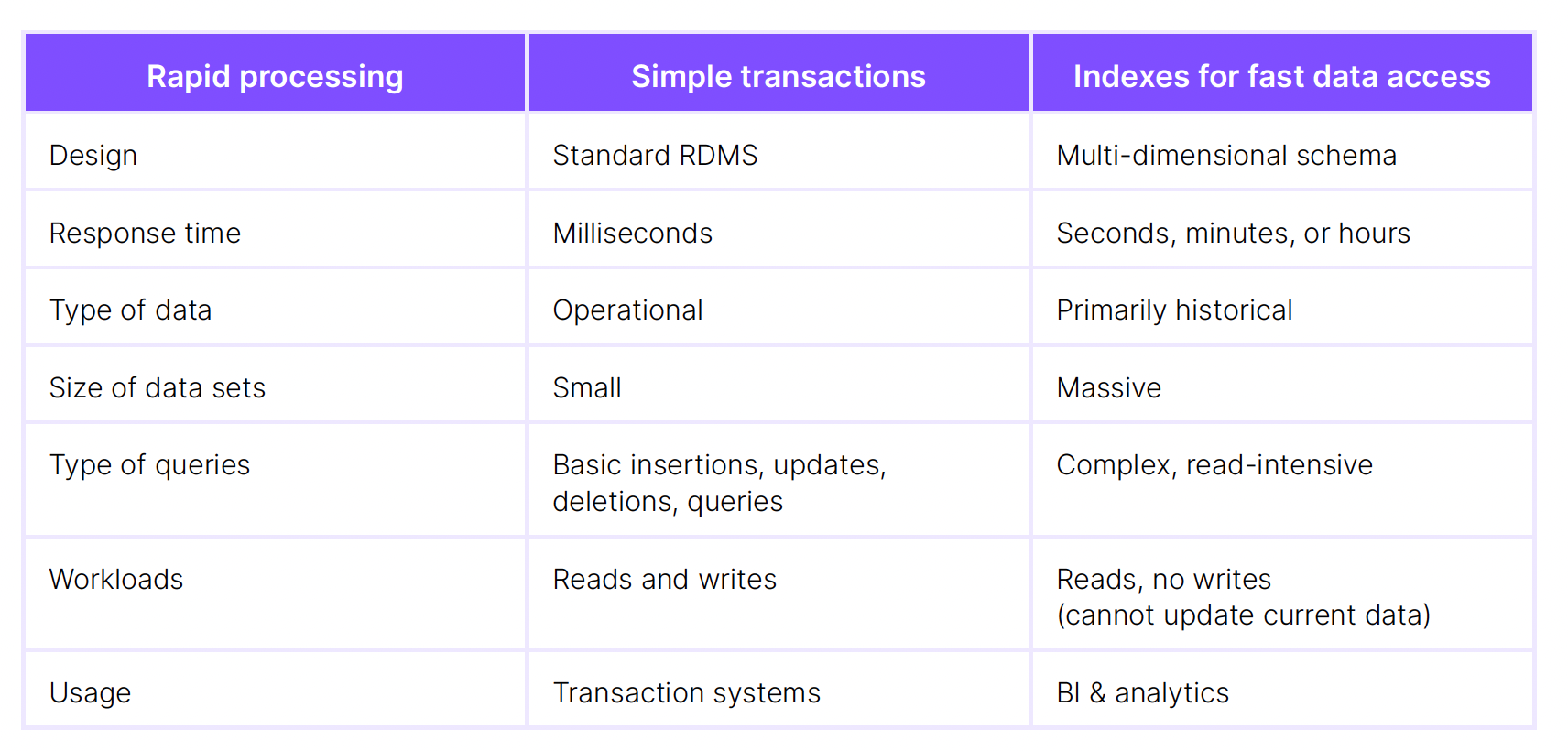

Another popular database system is OLAP (online analytical processing). OLAP is built for data analysis, while OLTP is used to power business operations. OLAP databases help generate business insights. However, OLTP databases actually run the business processes that allow a company to function, such as payment systems. See the table below for a side-by-side comparison of OLTP and OLAP.

OLTP vs. OLAP

Companies use OLTP to support their critical business processes, and OLAP to generate business intelligence from historical data. OLTP enables businesses to operate, while OLAP allows business leaders to make decisions.

The Problem: Running Complex Queries on OLTP

OLTP databases are best suited for simple queries. These simple queries leverage a small number of database records to perform basic transactions, such as calculating account balances. This reduces the workload on the system. Nevertheless, there are often cases when performing complex, read-intensive queries on an OLTP database might end up occurring.

As an example, consider a mortgage underwriting company. In this scenario, the mortgage company needs to use borrower data to determine if an applicant qualifies for a mortgage. The company’s OLTP database captures operational data from front end systems, online loan sites, and backend databases.

However, the underlying queries that calculate loan eligibility are complicated, requiring joins and other complex SQL instructions. The OLTP database slows down and destabilizes when performing complex queries that join across tables, filter across time windows, and compute aggregations. As a result, mortgage determinations take longer, causing the company to fund less loans.

This is how OLTP systems can easily strain due to read query-intensive workloads. As teams start to run more complex queries on OLTP databases, the services offered by the system begin to deteriorate. As a result, teams need to scale up their operations. They must buy more software and hire more workers. This is expensive, but often unavoidable, since OLTP systems are mission-critical.

To relieve pressure on the core database of record, data teams need to find another approach to OLTP. They must protect the core database and the business on which it relies. And one way to do this is by offloading expensive (i.e. high compute) queries from OLTP databases. This is the rationale for OLTP offload.

The Solution: OLTP Offload

Since OLTP databases are essential for business operations, running complex queries on them can slow down critical processes, including payments and transactions. These complex reads in the OLTP system can create strain, leading to downtime, missed latency SLAs, and excessive costs.

OLTP offload offers a solution. With offload, teams transfer expensive, complex queries off of OLTP databases. Instead, they perform these queries on read replicas, separate databases, or other data platforms.

This allows OLTP systems to deliver reliable and performant services without strain from read-intensive query workloads. OLTP offloading offers teams a number of advantages, including:

- Fresh, responsive results - By removing complex queries from the OLTP system, the database can perform transactions faster. Data freshness is preserved, and the results are correct.

- Improved reliability - When teams remove expensive queries, they lessen the strain on OLTP databases. This eliminates destabilization and downtime, which can cause lost revenue and support for a brand or service.

- Cost savings - OLTP offload can save teams money when performed on data platforms that are more accommodating of complex queries.

Many OLTP offload methods do not realize all three of these advantages. Teams need an operational data store to satisfy all three. An OLTP offload could arise for many different use cases. But some standard ones include:

- Queries from dashboards or UIs taxing the database - Dashboards and front-end interfaces query OLTP databases upon user request at unpredictable intervals. The random execution of complex queries can overwhelm OLTP systems.

- Teams writing services/jobs that take data from the OLTP system, denormalize it, and write it back - Denormalization jobs take data from the core OLTP system, processes, and writes it back to reporting tables. This can be done in batches, or incrementally on write via techniques like triggers. In either case, this takes up developer time and adds complexity. This also increases the chances of bugs since developers are doing work that SQL would normally do.

- The presence of materialized views - Materialized views store query results in memory. This is effective for denormalizing data, but the results are not fresh. As new data enters the database, materialized views are not automatically updated. They must be re-run in order to reflect current results. This constant query recomputation is expensive.

- The presence of read replicas - Teams use read replicas to query data, so they can take the load off core OLTP databases. However, read replicas are still not optimized for complex queries, since they mirror the OLTP architecture. There is still a lag in generating results. As such, read replicas do not result in fresh, responsive results.

These are some of the most common use cases for OLTP offload. They are not, however, the only ones.

Download the Free White Paper

Download our free white paper for a full overview of how OLTP offload can help your business. Find out how to move your expensive workloads from your OLTP database to an operational data store.

And keep an eye out for the next blog in our series, containing all the different ways data teams can perform OLTP offload.