What Happened to the Operational Data Store?

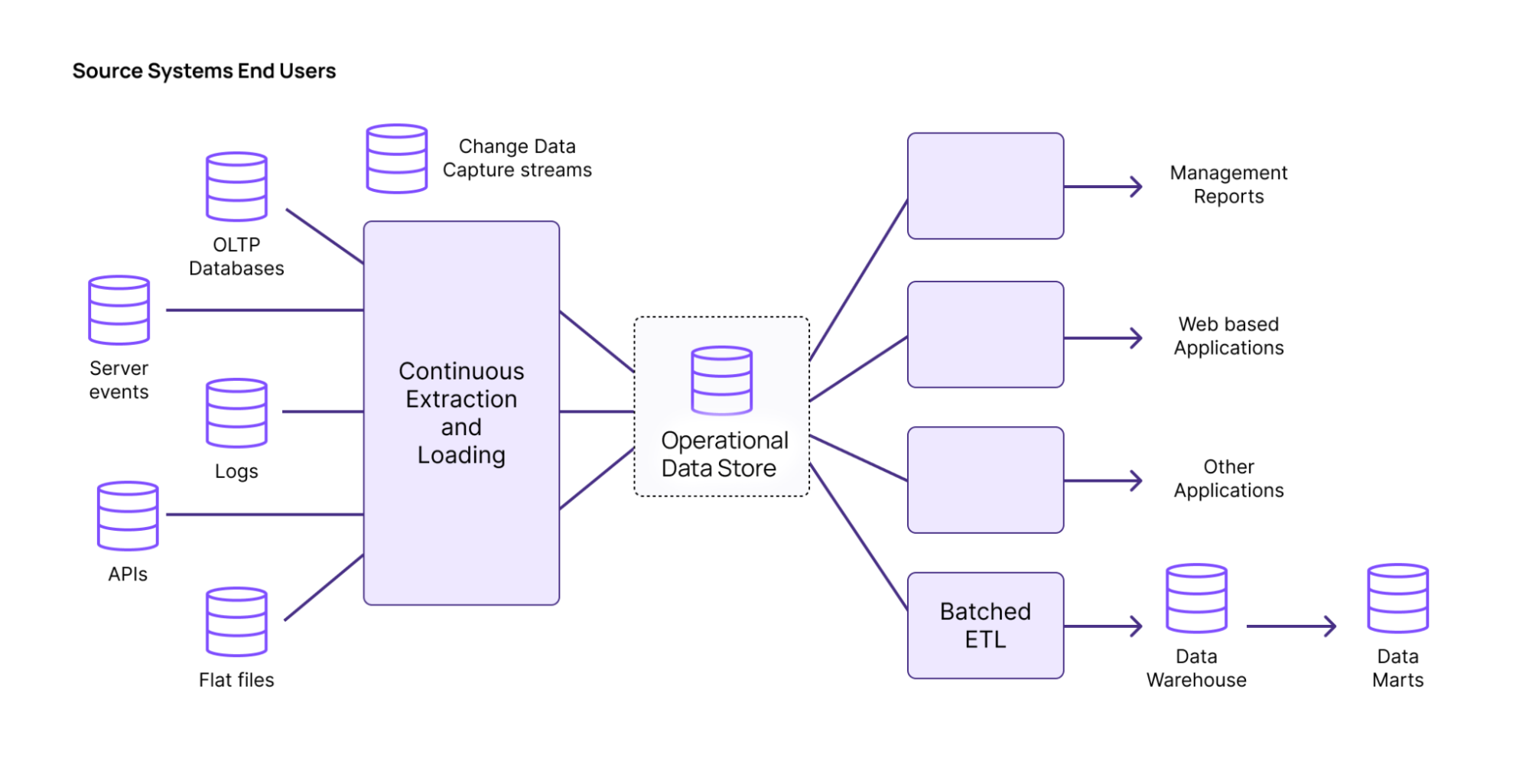

For many years, companies included an operational data store (ODS) in their standard data architecture. They served as a central repository for integrating data from various source systems, providing real-time, consolidated access to it. Operational data stores maintained real-time or near real-time data, and were the first points that allowed access to denormalized data across databases. The ODS could push data to a data warehouse, and then on to data marts, using additional ETL steps.

But you don't really see this pattern these days.

A brief history of the cloud data warehouse

Long story short, a new technology emerged that bypassed the ODS: the cloud data warehouse.

Cloud data warehouses allowed companies to consolidate many parts of their physical data footprint into a single elastically scaling cloud footprint. Consider data marts, one of the first thing eaten up by cloud data warehouses. In the past, a big reason departments had their own physical data warehouse infrastructure to power their data marts was so that they could have exclusive compute pools.

However, the modern cloud data warehouse separates compute and storage, unlike earlier on-premise data warehouses. This means that if a second department starts reading your data, you incur no overhead or performance penalty. This was not true in the past. You had to guard your physical resources carefully, using ETL between marts.

Separation of storage and compute enabled companies to share data directly without needing separate systems for each team: a new data mart is now just a CREATE VIRTUAL WAREHOUSE statement away. Data marts now live on as purely logical units of the architecture, but physically, they’re all part of the same cloud data warehouse footprint.

There are big benefits to this architecture: there’s more sharing of both the data and the hard work of modeling it. For instance, departments can now build on each other’s modeled datasets. In the best case, more useful work gets done across the board. In the worst case, it’s a complex soup of recursion and mashing dbt run while you trigger rebuilds of untold upstream dependencies.

And cloud data warehouses have continued to evolve. A modern data lakehouse is very similar to a cloud data warehouse. But with a lakehouse, you now can have heterogeneous compute systems, with the SQL compute engine being just one of them. All of these compute systems coordinate through the shared data lake. This means you can bring more types of data and run more types of workloads. You can mix and match. For instance, the output of a Python job with unstructured inputs could be a structured, relational dataset that can be then treated as the input to a classical SQL data warehousing job.

But it's not a perfect fit for operational work. Let's consider why.

What does an Operational Data Store do?

Operational data stores, like data warehouses, live downstream of other sources of data. So the first step is loading in this data. While a data warehouse can roll these changes up into batches that are loaded infrequently, an operational data store needs to incorporate them immediately, if it is to be valuable for operational work.

This means that an operational data store needs to be able to sustain a high volume of writes to ingest the data. An ODS also needs to serve a high volume of compute intensive reads for the operational read workloads. In contrast, cloud data warehouses are optimized for running compute intensive jobs, but only in the presence of a low volume of batched writes.

This means that operational data stores have requirements that combine OLTP systems with OLAP systems. And many companies that we’ve worked with have used OLTP databases, like Oracle, as their operational data stores. The OLAP architecture is a poor fit for an operational workload. So why do these workloads move?

The cloud data warehouse was re-architected for the cloud…

The big technical innovation underpinning cloud data warehouses was the separation of storage and compute. Prior to this, data warehouses were built using a “shared nothing” distributed architecture, but each node needed tightly-coupled storage and compute infrastructure.

This made sense for on-premise installations, where the hardware was always going to be procured in one large chunk. But the cloud’s best advantage is the ability to scale up and use compute resources opportunistically as workloads develop. Renting ten machines for an hour is the same price as renting one machine for ten hours, and it’s a far better user experience if the system scales in its ability to do work.

Cloud data warehouses were architected to have great performance when separated from the storage. This enables that wonderful elastic scalability, as well as the data sharing benefits of purely logical data marts with the same footprint. But they also meant that companies could grow their data footprint incrementally, rather than buying a very expensive piece of their data stack in one go.

… the operational data store was not

Meanwhile, operational data stores were not undergoing this transformation. They were designed for maximum throughput, and that meant using expensive attached disks and competing on performance benchmarks.

But as data footprints grew, centralizing more and more data through a single operational database became increasingly less tenable. The data volume increased, the number of source systems increased. This means more joins, a compute heavy task. Routing all of your company’s data through a single high performance bottleneck was risky.

Operational workloads need access to the source data, and without an ODS, that workload is going in one of the following two directions.

Path 1: Abuse your data warehouse

Since cloud data warehouses have this elastic scaleup capability, teams can also put their new operational workloads on the cloud data warehouse rather than buying an expensive ODS. Why incorporate an ODS into your data architecture at all? It’s just one more virtual warehouse in your system.

However, neither data lakehouses nor data warehouses are built for operational use cases. They are batch systems that are only economical when you refresh them infrequently. In contrast, an ODS needs to ingest real-time data from operational systems.

Teams can abuse their data warehouses by frequently re-running workloads to provide fresh results for operational use cases. This is very costly, and eventually the data warehouse will reach freshness limitations. Fundamentally, the data lake is too far downstream to be fresh. By the time the data hits S3 in a batched update, it’s too stale. But it's a pot that boils slowly.

Path 2: Build streaming infrastructure

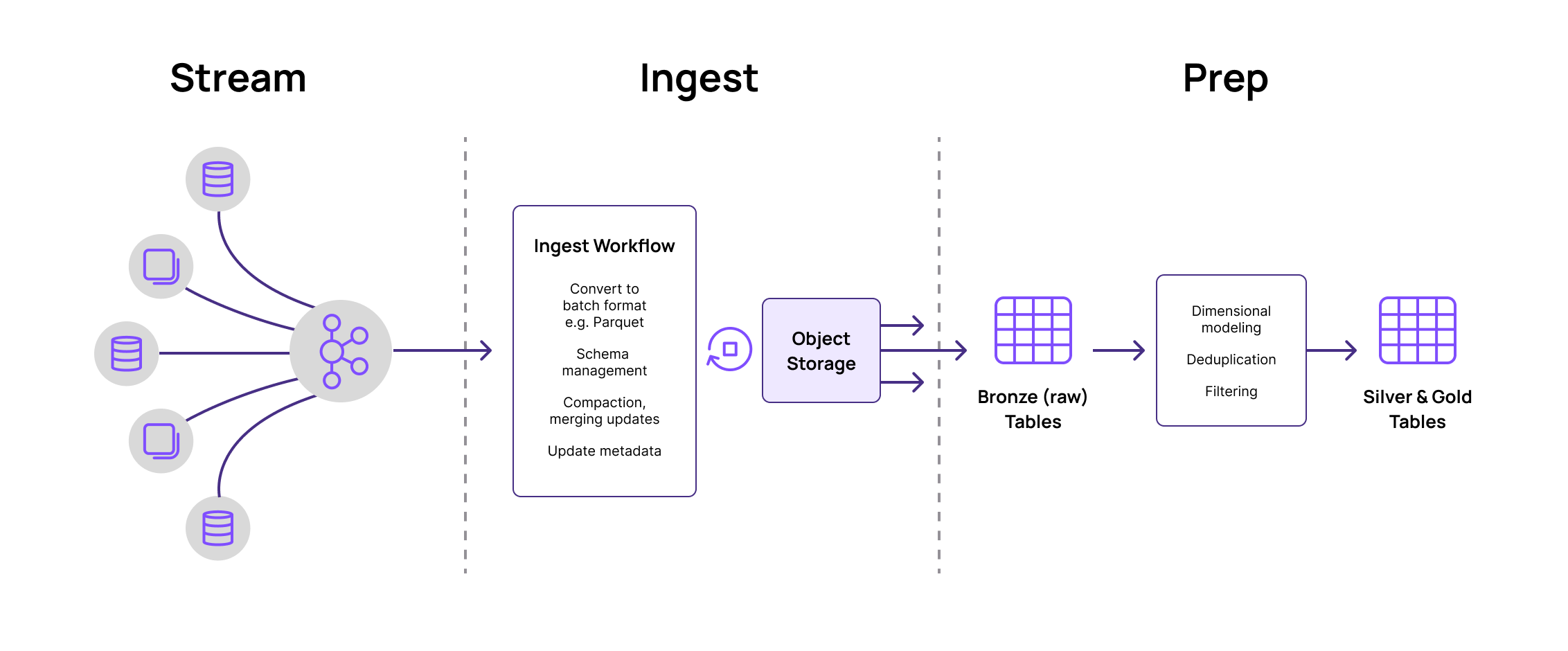

The second option is building streaming infrastructure. To power operational use cases, teams turn to Kafka and Flink to build real-time experiences. On the plus side, this infrastructure is decoupled from the upstream OLTP databases, and has all the low-latency and high-throughput capabilities that are needed for these workloads.

On the other hand, you’re on your own! You’re fundamentally now building your own disaggregated operational data store from parts. And working with streaming data isn’t easy. Teams must join multiple streams of data, model this data in code, and react to changes in schemas, often through cumbersome logic. Hiring new talent, managing the system, and servicing complex architectures all make stream processors an expensive solution. Fundamentally, stream processors are not a database.

Additionally, the data models that you move into the streaming pipeline typically require joining data in stream. And large, stateful joins of slowly changing dimensions are the area where stream processors have the most performance problems. So moving these kinds of workloads is painful.

Finally, streaming applications are not written in SQL. The modeling is done in Java and Scala code. So producing models is not nearly as simple as creating them in standard SQL.

There are potential workarounds that teams sometimes employ. One option is to model everything once, in your streaming system, and then sink the results to your batch-based data lakehouse. In other words, you’re "shifting left" some of your modeling from your batch layer to your streaming layer.

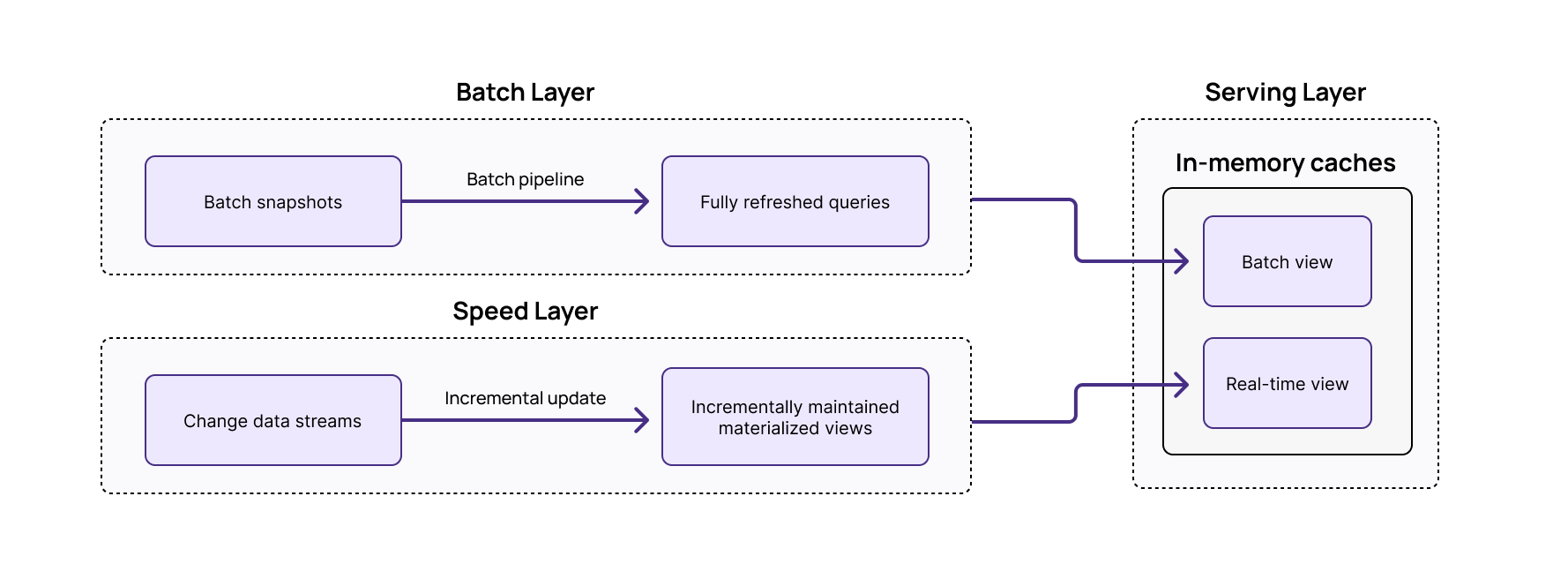

And that’s why teams often turn to parallel infrastructures. In this scenario, you only model those streams that you need to support your operational/real-time use cases. Most of the data only goes through the batch pipeline, where it is easily modeled in SQL. After all, the data warehouses can eat terabytes of joins without breaking a sweat.

Of course, everyone wants the benefits of streaming systems in their data architecture. But we shouldn’t have to become Flink or Kafka experts to get it.

This is getting out of hand… Now there are two of them!

The reality is that most companies do… both. Data teams are typically too busy dealing with ad hoc requests, triaging data infrastructure issues, and clearing out the data queue to consistently focus on building a new system. So they usually go with the "abuse the data warehouse" path and pay high bills for always-on virtual warehouses.

But production teams often opt for building stream processing infrastructure that they feel comfortable managing, where they manually do the work of building and maintaining their own in-house database. So their data architecture mostly looks like this:

You can’t go home again: an Operational Data Store needs to live in the cloud world

The status quo isn’t great, but neither is the prospect of pulling operational workloads into a single expensive HTAP database. What would an operational data store of the future need to make things meaningfully better?

- Work natively on streams of data - You need an ODS that offers native support for streaming data. Building and maintaining streaming systems is difficult and time-consuming. You shouldn’t have to become an expert in Flink or Kafka to leverage data streams. Nevertheless, the streams already exist. And they are the optimal way to move the data around.

- Easily perform complex joins - The bread and butter ODS workload is joining disparate streams of data together. A reimagined ODS needs to join sources on the fly using SQL, with strong consistency, while keeping the results up to date. This unlocks use cases that require high volumes of fresh data, such as fraud detection or supply chain optimization.

- Think declaratively… You probably want to avoid custom development work, or building new pipelines. The operational data store of the future allows you to manipulate streaming data in standard SQL.

- …but act incrementally. You don’t want an ODS that wastes compute (and is hence expensive) because it recalculates results every few milliseconds. You need an ODS that incrementally updates your views as data comes in, ensuring that you don’t use excessive compute. This delivers significant cost savings.

- Peel off workloads over time, not requiring a huge upfront cost investment on day 1 - An ideal operational data store does not require a huge upfront cost investment. You need a cloud-based ODS that gradually subsumes your operational workloads, while paying only for the exact compute required, just like a cloud data warehouse.

- Integrate with systems downstream - The ODS of the future pushes updates to downstream systems like S3 to enable historical reporting in a cloud data warehouse, pushes updates to Kafka to support event driven architectures, and is queryable by clients in SQL.

- Share data modeling with the lakehouse for data analysts to use - You want an operational data store that can share data modeling with your lakehouse downstream. This allows data analysts to leverage the modeled data in their analysis, and avoids the cost of recomputation.

Ultimately, a cloud-native operational data store is a powerful architectural pattern when used correctly: lowering costs, streamlining data modeling, and simplifying the task of delivering operational workloads without large costs or development overheads.

But it makes sense that people shied away from it when all they had were databases designed for an on-premise world. It's time for a comeback.