12.03.2025

Summarizing cluster health

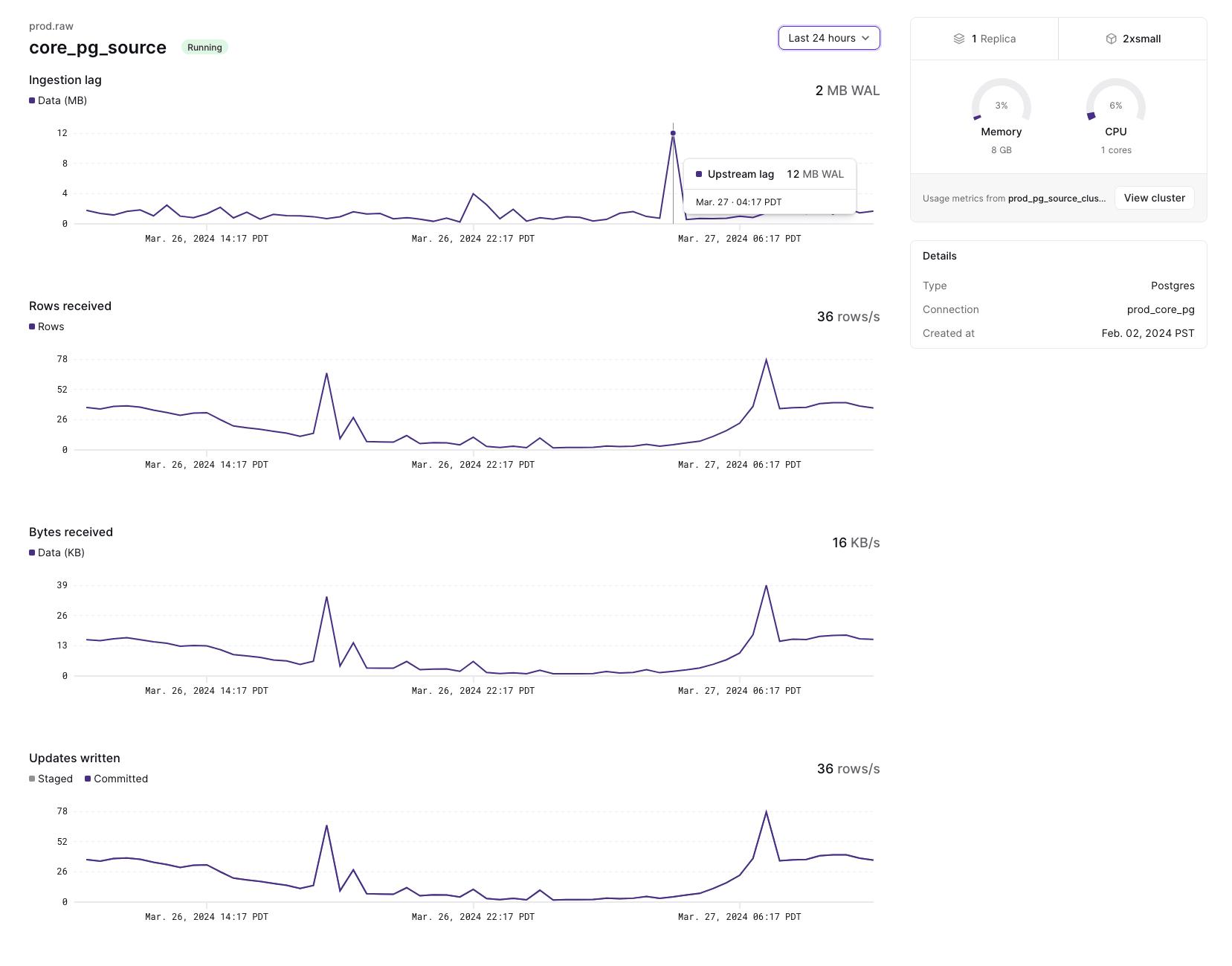

The EXPLAIN ANALYZE statement provides insight into how a given index or materialized view is running. We’ve expanded EXPLAIN ANALYZE to let you analyze your current cluster, not just an individual object. The new statement, EXPLAIN ANALYZE CLUSTER, presents a summary of every object installed on your current cluster. You can summarize CPU time spent and memory consumed per object, and you can see whether any objects on the cluster have skewed operators, where work isn’t evenly distributed among workers.

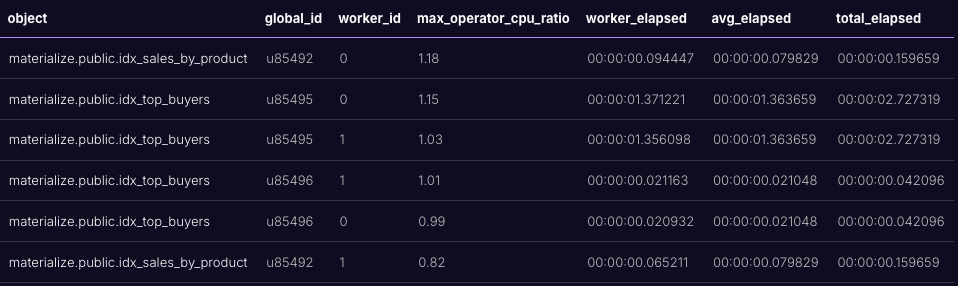

If we run EXPLAIN ANALYZE CLUSTER CPU WITH SKEW on our introspection debugging blogpost’s queries, we get output like the following:

The max_operator_cpu_ratios here are perfectly reasonable. Ratios above 2 merit investigation using EXPLAIN ANALYZE on that particular object. Objects comprise many operators, and we’re reporting the worst ratio for any operator, so a high CPU or memory ratio in one operator is something to look at, but not inherently problematic. Using EXPLAIN ANALYZE CLUSTER ... WITH SKEW, you can quickly identify which objects are worth looking at more deeply.
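As a rough sketch of that triage workflow: start with the cluster-wide summary, then drill into any object whose worst operator ratio looks high. The index name `my_index` is hypothetical, and the object-level `FOR INDEX` form is our assumption about how the per-object statement is spelled; check the docs for the exact syntax.

```sql
-- Cluster-wide summary: one row per object, with its worst per-operator CPU skew ratio.
EXPLAIN ANALYZE CLUSTER CPU WITH SKEW;

-- Follow-up on a single object whose ratio was above 2.
-- `my_index` is a hypothetical name; the FOR INDEX form is assumed, not taken from this post.
EXPLAIN ANALYZE CPU WITH SKEW FOR INDEX my_index;
```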

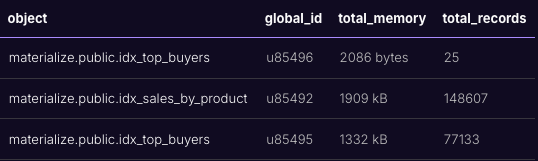

If we run EXPLAIN ANALYZE CLUSTER MEMORY, we get output like the following:

Just like other EXPLAIN ANALYZE statements, you can run EXPLAIN ANALYZE CLUSTER ... AS SQL to get the SQL query on our introspection infrastructure that generates the summary. If you have a particular measure of cluster health you’re interested in, those queries make a good starting point. Since EXPLAIN ANALYZE statements query our introspection infrastructure, these statements implicitly work on your current cluster; if you have more than one cluster, be sure to SET CLUSTER appropriately before running these commands.
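A minimal sketch of that sequence, assuming a cluster named `my_cluster` (a hypothetical name) and assuming that AS SQL can be appended to the CPU WITH SKEW form in the same way as to the other forms:

```sql
-- Point the session at the cluster you want to summarize.
-- `my_cluster` is a placeholder; substitute one of your own clusters.
SET CLUSTER = my_cluster;

-- Summarize memory consumed per object on that cluster.
EXPLAIN ANALYZE CLUSTER MEMORY;

-- Ask for the underlying introspection query instead of its results,
-- as a starting point for a custom health check.
EXPLAIN ANALYZE CLUSTER CPU WITH SKEW AS SQL;
```

The query returned by the last statement can be copied and edited, for example to filter or re-sort the summary, without having to write the introspection joins from scratch.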

Finally, the EXPLAIN syntax isn’t a stable interface, and we’ll be improving on the output here over time; we’d be happy to hear your feedback. Until then, you can read more about EXPLAIN ANALYZE CLUSTER in the docs!